Jason Cong

Chancellor's Professor

Director, VLSI Architecture, Synthesis and Technology (VAST) Laboratory

Computer Science Department

University of California, Los Angeles, California, USA

Customizable Computing: from Single-Chips to Data Centers

Monday, 10 October 2016 at 14:50

Abstract:

With Intel’s $17B acquisition of Altera completed in December 2015, customizable computing is moving from advanced research projects into mainstream technology. However, many research problems remain open, such as how best to integrate CPUs and FPGAs architecturally, which programming models and compilation flows are most efficient for customizable computing, and how to manage such heterogeneous architectures at runtime at cloud scale. In this talk, I shall present the ongoing research in these areas and demonstrate the success of customized computing at the chip level, server level, and datacenter level, using examples from multiple application domains, including machine learning and computational genomics.

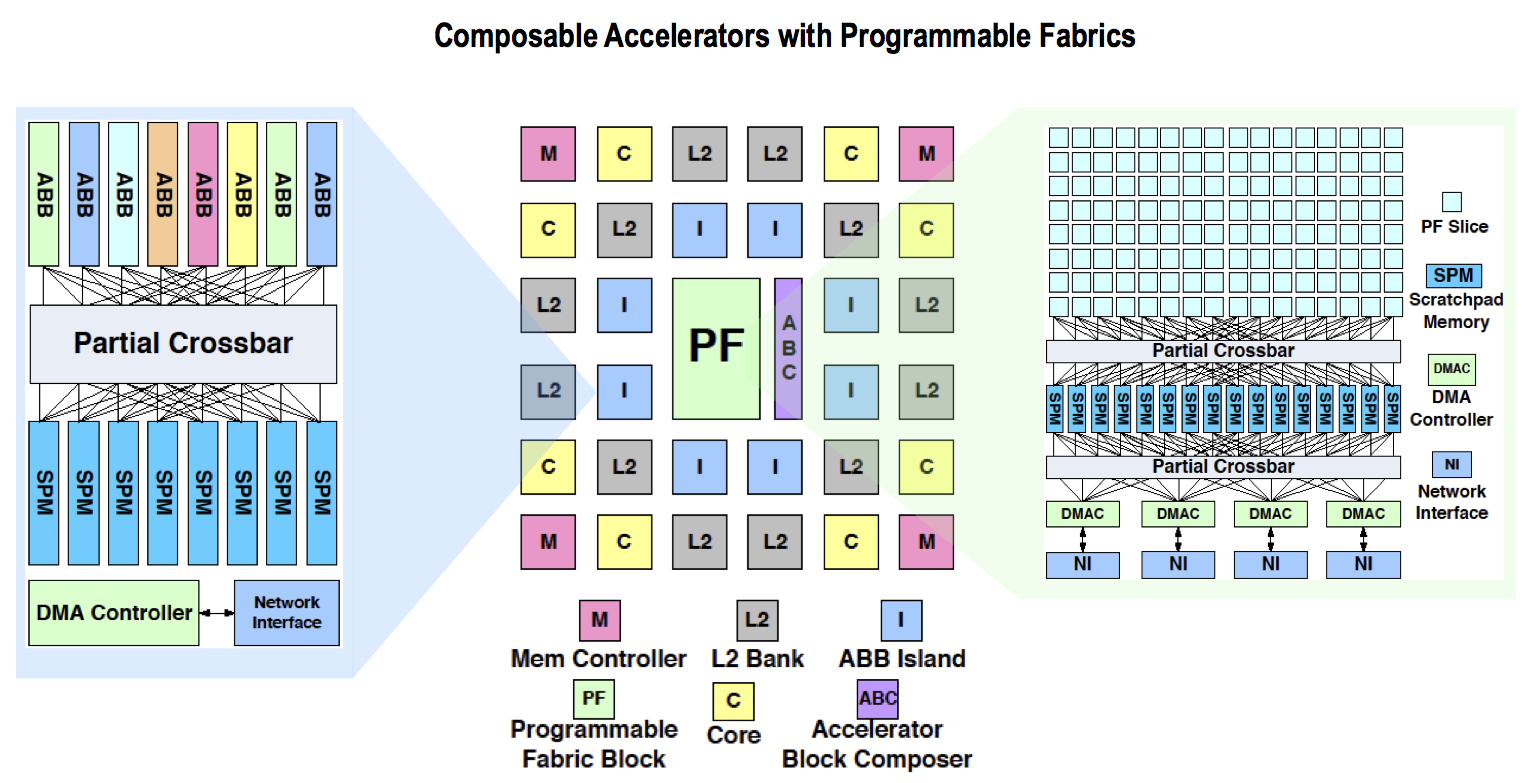

At the chip level, we have proposed the idea of accelerator-rich architectures (ARAs) and explored the opportunities of accelerators with fixed functions, composable functionalities, and reprogrammable fabrics. Recently, we have open-sourced our PARADE simulator to stimulate more research in ARAs [1]. We have also studied customized interconnects between different accelerators and provided address translation support between CPU cores and accelerators.

At the server level, we consider CPU+FPGA platforms for application acceleration. One recent focus is the unified representation and acceleration of both convolutional layers and fully connected layers in CNNs and DNNs on a single FPGA [2]. We have developed a hardware-software co-designed CNN/DNN library called Caffeine and integrated it with the industry-standard machine learning framework Caffe, achieving energy gains of 43.5x and 1.5x over a 12-core CPU and a K40 GPU, respectively, using a medium-sized FPGA.

At the datacenter level, we have explored architecture opportunities ranging from low-power field-programmable SoCs to server-class computer nodes plus high-capacity FPGAs. Moreover, we have developed the Merlin compiler and the Blaze runtime (in collaboration with Falcon Computing Solutions, Inc. [4]) to better support the programming and deployment of customized accelerators at datacenter scale [3]. By integrating FPGAs into big data frameworks like Apache Spark and YARN, we achieve a 1.7x to 3x performance speedup for a range of machine learning and computational genomics applications.

References

1. Cong et al. PARADE: A Cycle-Accurate Full-System Simulation Platform for Accelerator-Rich Architectural Design and Exploration. The 2015 IEEE/ACM International Conference on Computer-Aided Design (ICCAD 2015), 2015.

2. Zhang et al. Caffeine: Towards Uniformed Representation and Acceleration for Deep Convolutional Neural Networks. To appear in the 2016 IEEE/ACM International Conference on Computer-Aided Design (ICCAD 2016), 2016.

3. Huang et al. To appear in the ACM Symposium on Cloud Computing (ACM SoCC 2016), 2016.

4. http://www.falcon-computing.com

About the speaker:

Jason Cong received his B.S. degree in computer science from Peking University in 1985, and his M.S. and Ph.D. degrees in computer science from the University of Illinois at Urbana-Champaign in 1987 and 1990, respectively. Currently, he is a Chancellor’s Professor at the UCLA Computer Science Department and the director of the Center for Domain-Specific Computing (CDSC). He served as the department chair from 2005 to 2008. Dr. Cong’s research interests include electronic design automation, energy-efficient computing, customized computing for big-data applications, and highly scalable algorithms. He has over 400 publications in these areas, including 10 best paper awards, and received the 2011 ACM/IEEE A. Richard Newton Technical Impact Award in Electronic Design Automation. He was elected an IEEE Fellow in 2000 and an ACM Fellow in 2008. He is the recipient of the 2010 IEEE Circuits and Systems Society Technical Achievement Award "For seminal contributions to electronic design automation, especially in FPGA synthesis, VLSI interconnect optimization, and physical design automation."

Dr. Cong has graduated 33 PhD students. Nine of them are now faculty members at major research universities, including Cornell, Fudan Univ., Georgia Tech, Peking Univ., Purdue, SUNY Binghamton, UCLA, UIUC, and UT Austin. One of them is now an IEEE Fellow, six of them have received the highly competitive NSF CAREER Award, and one of them received the ACM SIGDA Outstanding Dissertation Award. Dr. Cong has co-founded three companies with his students: Aplus Design Technologies for FPGA physical synthesis and architecture evaluation (acquired by Magma in 2003, now part of Synopsys), AutoESL Design Technologies for high-level synthesis (acquired by Xilinx in 2011), and Neptune Design Automation for ultra-fast FPGA physical design (acquired by Xilinx in 2013). Currently, he is a co-founder and the chief scientific advisor of Falcon Computing Solutions, a startup dedicated to enabling FPGA-based customized computing in data centers.
